For those not in the United Kingdom, a massive scandal has erupted around allegations that one of the country’s tabloids – the News of the World (a subsidiary of Rupert Murdoch’s News Corporation) – was illegally hacking into and listening in on the voicemails of not only royal family members and celebrities but also murder victims and family members of soldiers killed in Afghanistan.

The fallout from the scandal has, among other things, caused the 168-year-old newspaper to be unceremoniously closed, prompted an enormous investigation into the actions of editors and executives at the newspaper, led to the resignation (and arrest) of Andy Coulson – former News of the World editor and director of communications for the Prime Minister – and thrown into doubt Rupert Murdoch’s bid to gain complete control over the British satellite television network BSkyB.

For those wanting to know more, I encourage you to head over to the Guardian, which broke the story and has done some of the best reporting on it. Also, possibly the best piece of analysis I’ve read on the whole sordid affair is this post from Reuters, which essentially points out that by shutting down News of the World, News Corp may shrewdly ensure that all incriminating documents can (legally) be destroyed. Evil genius stuff.

But why bring this all up here at eaves.ca?

Because I think this is an example of a trend in media that I’ve been arguing has been under way for some time.

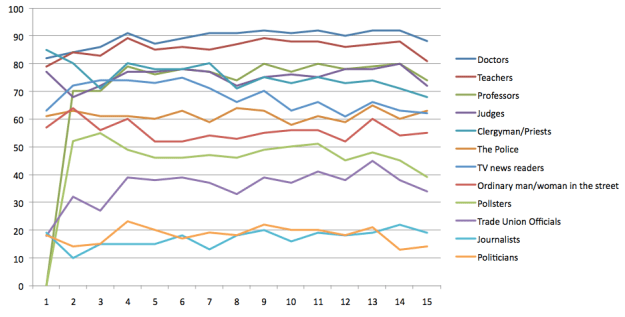

Contrary to what news people would have you believe, my sense is that most people don’t trust newspapers – no more so than they trust governments. Since 1983, Ipsos MORI and the British Medical Association have asked UK citizens who they trust. The results for politicians are grim. The interesting thing is, they are no better for journalists (although TV news anchors do okay). Don’t believe me? Take a look at the data tables from Ipsos MORI. Or look at the chart Benne Dezzle over at Viceland created out of the data.

There is no doubt people value the products of governments and the media – but this data suggests they don’t trust the people creating them, which I really think is a roundabout way of saying: they don’t trust the system that creates the news.

I spend a lot of my time arguing that governments need to be more transparent, and that this (contrary to what many public servants feel) will make them more, not less, effective. Back in 2009, in reaction to the concern that the print media was dying, I wrote a blog post saying the same was true for journalism. Thanks, in part, to Jay Rosen listing it as part of his flying seminar on the future of news, it became widely read and ended up being reprinted, along with Taylor Owen’s and my article Missing the Link, in the journalism textbook The New Journalist. Part of what I think is going on in the UK is a manifestation of the blog post, so if you haven’t read it, I think now is as good a time as any.

The fact is, newsrooms are frequently as opaque (both in process and, sometimes, in motivations) as governments are. People may be willing to rely on them, and they’ll use them if their outputs are good, but they’ll turn on them, and quickly, if they come to understand that the process stinks. This is true of any organization, and news media doesn’t get a special pass because of the role it plays – indeed the opposite may be true. But more profoundly, I think it is interesting how what many people consider to be two of the key pillars of western democracy are staffed by people who are among the least trusted in our society. Maybe that’s okay. But maybe it’s not. If we think we need better forms of government – which many people seem to feel we do – it may also be that we need better ways of generating, managing and engaging in the accountability of that government.

Of course, I don’t want to overplay the situation here. News of the World doomed itself because it broke the law. More importantly, it did so in a truly offensive way: hacking into the cell phone of a murder victim who was an everyday person. Admittedly, when the victims were celebrities, royals and politicians, it percolated as a relatively contained scandal. But if we believe that transparency is the sunlight that causes governments to be less corrupt – or at least forces politicians to recognize their decisions will be more scrutinized – maybe a little transparency might have caused the executives and editors at News Corp to behave a little better as well. I’m not sure what a more open media organization might look like – although Wikipedia does an interesting job – but from both a brand-protection and a values-based decision-making perspective, a little transparency could be the right incentive to ensure that the journalists, editors and executives in a news system few of us seem to trust behave a little better. And that might cause them to earn more of the trust I think many deserve.