Please read background below for more info. Here’s the skinny.

What

A one-day mini-conference, held (tentatively) in San Francisco on January 21st and 22nd, 2014 (originally Vancouver on January 14th; remote participation possible), for Mozillians about community metrics and dashboards.

Update: Apologies for the change of date and location. This event has sparked a lot of interest, so we had to change it in order to manage the number of people.

Why?

It turns out that in the past 2-3 years a number of people across Mozilla have been tinkering with dashboards and metrics in order to assess community contributions, effectiveness, bottlenecks, performance, etc. For some people this is their job (looking at you, Mike Hoye), for others this is something they arrived at by necessity (looking at you, SUMO group), and for others it was just a fun hobby or experiment.

Certainly I (and I believe co-collaborators Liz Henry and Mike Hoye) think metrics in general and dashboards in particular can be powerful tools, not just to understand what is going on in the Mozilla community, but as a way to empower contributors and reduce the friction to participating at Mozilla.

And yet as a community of practice, I’m not sure those interested in converting community metrics into some form of measurable output have ever gathered together. We’ve not exchanged best practices, aligned around a common nomenclature or discussed the impact these dashboards could have on the community, management and other aspects of Mozilla.

Such an exercise, we think, could be productive.

Who

Who should come? Great question. Pretty much anyone who is playing around with metrics around community, participation, or something parallel at Mozilla. If you are interested in participating, please sign up here.

Who is behind this? I’ve outlined more in the background below, but this event is being hosted by me, Mike Hoye (engineering community manager) and Liz Henry (bugmaster).

Goal

As you’ve probably gathered, the goals are to:

- Get a better understanding of what community metrics and dashboards exist across Mozilla

- Learn about how such dashboards and metrics are being used to engage, manage or organize communities and/or influence operations

- Exchange best practices around both the development and the use/application of dashboards and metrics

- Stretch goal: begin to define some common definitions for metrics that exist across Mozilla, to enable portability of metrics across dashboards.

Hope this sounds compelling. Please feel free to email or ping me if you have questions.

—–

Background

I know that my co-collaborators, Mike Hoye and Liz Henry, have their own reasons for ending up here. I, as many readers know, am deeply interested in understanding how open source communities can combine data and analytics with negotiation and management theory to better serve their members. This was the focus of my keynote at OSCON in 2012 (posted below).

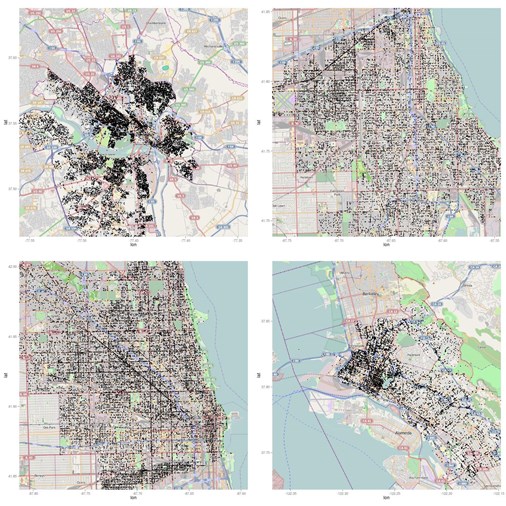

For several years I tried, with minimal success, to create some dashboards that might provide an overview of the community’s health as well as diagnose problems that were harming growth. Despite my own limited success, it has been fascinating to see how more and more individuals across Mozilla (some developers, some managers, others just curious observers) have been scraping data they control or can access to create dashboards to better understand what is going on in their part of the community. The fact is, there are probably at least 15 different people running community-oriented dashboards across Mozilla, and almost none of us are talking to one another about it.

At the Mozilla Summit in Toronto, after speaking with Mike Hoye (engineering community manager) and Liz Henry (bugmaster), I proposed that we do a low-key mini-conference to bring together the various Mozilla stakeholders in this space. Each of us would love to know what others at Mozilla are doing with dashboards and to understand how they are being used. We figured that if we wanted to learn from others who were creating and using dashboards and community metrics data, they probably do too. So here we are!

In addition to Mozillians, I’d also love to invite an old colleague, Diederik van Liere, who looks at community metrics for the Wikimedia Foundation, as his insights might also be valuable to us.