Those on Twitter will already know that this morning I had the privilege of conducting a press conference with Minister Day about the launch of data.gc.ca – the Federal Government’s Open Data portal. For those wanting to learn more about open data and why it matters, I suggest this and this blog post, and this article – they outline some of the reasons why open data matters.

In this post I want to review what works, and doesn’t work, about data.gc.ca.

What works

Probably the most important thing about data.gc.ca is that it exists. It means that public servants across the Government of Canada who have data they would like to share can now point to a website that is part of government policy. It is an enormous signal of permission from a central agency, one that gives the people who want to share data a process and a vehicle by which to do so. That, in and of itself, is significant.

Indeed, I was informed that already a number of ministries and individuals are starting to approach those operating the portal asking to share their data. This is exactly the type of outcome we as citizens should want.

Moreover, I’ve been told that the government wants to double the number of data sets, and the number of ministries, involved in the site. So the other part that “works” on this site is the commitment to make it bigger. This is also important, as some open data portals have launched with great fanfare, only to languish as no new data sets are added and the existing ones are not updated and so fall out of date.

What’s a work in progress

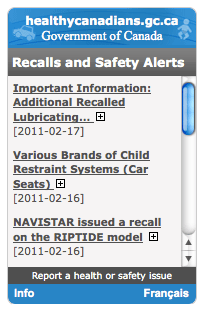

The number of “high value” datasets is, relatively speaking, fairly limited. I’m always cautious about this as, I feel, what constitutes high value varies from user to user. That said, there are clearly data sets that will have greater impact on Canadians: budget data, line item spend data by department (as the UK does), food inspection data, product recall data, pretty much everything on the StatsCan website, Service Canada locations, postal code data, mailbox location data, business license data, and Canada Revenue data on charities and publicly traded companies are a few that quickly come to mind. Clearly, I can imagine many, many more…

I think the transparency, tech, innovation, mobile and online services communities will be watching data.gc.ca closely to see what data sets get added. What is great is that the government is asking people what data sets they’d like to see added. I strongly encourage people to let the government know what they’d like to see, especially when it involves data the government is already sharing, but in unhelpful formats.

What doesn’t work

In a word: the license.

The license on data.gc.ca is deeply, deeply flawed. Some might go so far as to say that the license does not make the data open at all – a critique that I think is fair. I would say this: presently the open data license on data.gc.ca effectively kills any possible business innovation, and severely limits use in non-profit realms.

The first, and most problematic is this line:

“You shall not use the data made available through the GC Open Data Portal in any way which, in the opinion of Canada, may bring disrepute to or prejudice the reputation of Canada.”

What does this mean? Does it mean that any journalist who writes a story, using data from the portal, that is critical of the government, is in violation of the terms of use? It would appear to be the case. From an accountability and transparency perspective, this is a fatal problem.

But it is also problematic from a business perspective. If one wanted to use a data set to help show citizens where they might be well, and poorly, served by their government, would one be in violation? The problem here is that the clause is both sufficiently stifling and sufficiently negative that many businesses will see the risk of using this data as simply too great.

UPDATE: Thursday March 17th, 3:30pm, the minister called me to inform me that they would be striking this clause from the contract. This is excellent news and Treasury Board deserves credit for moving quickly. It’s also great recognition that this is a pilot (i.e. beta) project, so hopefully the other problems mentioned here and in the comments below will also be addressed.

It is worth noting that no other open data portal in the world has this clause.

The second challenging line is:

“you shall not disassemble, decompile except for the specific purpose of recompiling for software compatibility, or in any way attempt to reverse engineer the data made available through the GC Open Data Portal or any part thereof, and you shall not merge or link the data made available through the GC Open Data Portal with any product or database for the purpose of identifying an individual, family or household or in such a fashion that gives the appearance that you may have received or had access to, information held by Canada about any identifiable individual, family or household or about an organization or business.”

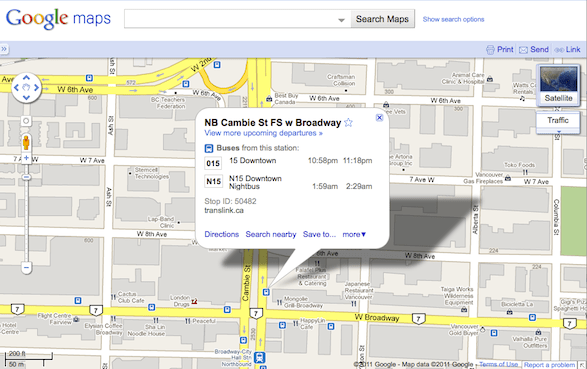

While I understand the intent of this line, it is deeply problematic for several reasons. First, many business models rely on identifying individuals. Indeed, individuals frequently ask businesses to do this. Google, for example, knows who I am and offers custom services to me based on the data they have about me. It would appear that the terms of use would prevent Google from using Government of Canada data to improve its service even if I have given them permission. Moreover, the future of the digital economy is around providing customized services. While this data has been digitized, it effectively cannot be used as part of the digital economy.

More disconcerting is that these terms apply not only to individuals, but also to organizations and businesses. This means that you cannot use the data to “identify” a business. Well, over at Emitter.ca we use data from Environment Canada to show citizens facilities that pollute near them. Since we identify both the facilities and the companies that use them (not to mention the politicians whose ridings these facilities sit in), are we not in violation of the terms of use? In a similar vein, I’ve talked about how government data could have prevented $3B of tax fraud. Sadly, data from this portal would not have changed that since, in order to have found the fraud, you’d have to have identified the charitable organizations involved. Consequently, this requirement manifestly destroys any accountability the data might create.

It is again worth noting that no other open data portal in the world has this clause.

And finally:

4.1 You shall include and maintain on all reproductions of the data made available through the GC Open Data Portal, produced pursuant to section 3 above, the following notice:

Reproduced and distributed with the permission of the Government of Canada.

4.2 Where any of the data made available through the GC Open Data Portal is contained within a Value-Added Product, you shall include in a prominent location on such Value-Added Product the following notice:

This product has been produced by or for (your name – or corporate name, if applicable) and includes data provided by the Government of Canada.

The incorporation of data sourced from the Government of Canada within this product shall not be construed as constituting an endorsement by the Government of Canada of our product.

or any other notice approved in writing by Canada.

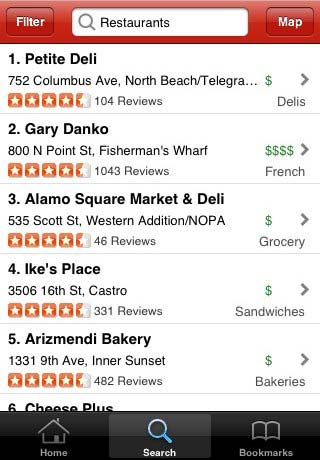

The problem here is that this creates what we call the “NASCAR effect.” As you use more and more government data, these “prominent” displays of attribution begin to pile up. If you’re using data from 3 different governments, each of which requires attribution, pretty soon all you’re going to see are the attribution statements, and not the map or other information that you are looking for! I outlined this problem in more detail here. The UK Government has handled this issue much, much more gracefully.

Indeed, speaking of the UK Open Government License, I really wish our government had just copied it wholesale. We have similar government and legal systems, so I see no reason why it would not easily translate to Canada. It is radically better than what is offered on data.gc.ca and, by adopting it, we might begin to move towards a single government license within Commonwealth countries, which would be a real win. Of course, I’d love it if we adopted the PDDL, but the UK Open Government License would be okay too.

In Summary

The launch of data.gc.ca is an important first step. It gives those of us interested in open data and open government a vehicle by which to get more data open and improve accountability, transparency, and business and social innovation. That said, there is much work still to be done: getting more data up and, more importantly, addressing the significant concerns around the license. I have spoken to Treasury Board President Stockwell Day about these concerns and he is very interested and engaged by them. My hope is that with more Canadians expressing their concerns, and with better understanding by ministerial and political staff, we can land on the right license and help find ways to improve the website and program. That’s why we do beta launches in the tech world; hopefully it is something the government will be able to do here too.

Apologies for any typos, trying to get this out quickly, please let me know if you find any.

So while the app is fairly light on features today… I can imagine a future where it becomes significantly more engaging and comprehensive, using open data on the data and city services to show maps of where and how money is spent, as well as posting reminders for in-person meetups, tours of facilities, and dial-in town hall meetings. The best way to get to these more advanced features is to experiment with getting the lighter features right today. The challenge for Calgary on this front is that it seems to have no plans for sharing much data with the public (that I’ve heard of); its open data portal has few offerings and its design is sorely lacking. Ultimately, if you want to consult citizens on planning and the budget, it might be nice to go beyond surveys and share more raw data and information with them. It’s a piece of the puzzle I think will be essential. This is something no city seems to be tackling with any gusto and, along with crime data, it is emerging as a serious litmus test of a city’s intention to be transparent.
