Increasingly, governments are looking for new and more impactful ways to communicate with citizens. There is a slow but growing awareness that traditional channels of outreach, such as TV stories and newspaper advertisements, either fail to reach a significant portion of the population or do little to raise awareness of a given issue.

The exciting thing about this is that there is some real innovation taking place in governments as they grapple with this challenge. This blog post will look at one example from Canada and talk about why the innovation pioneered to date – while a worthy effort – falls far short of its potential. Specifically, I’m going to talk about how when governments share data, even when they use new technologies, they remain stuck in a government-centric approach that limits effectiveness. The real impact of new technology won’t come until governments begin to think more radically in terms of citizen-centric approaches.

The dilemma around reaching citizens is probably felt most acutely in areas where there is a greater sense of urgency around the information – like, say, in issues relating to health and safety. Consequently, it is perhaps not surprising that in Canada some of the more innovative outreach work has been pioneered by the national agency responsible for many of these issues, Health Canada.

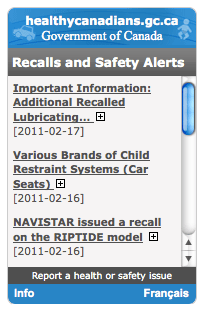

The most cutting-edge work I’ve seen is an effort by Health Canada to share advisories from Health Canada, Transport Canada and the Canadian Food Inspection Agency via three vehicles: an RSS feed, a mobile app available for BlackBerry, iPhone (pictured far right) and Android, and finally a widget (pictured near right) that anyone can install on their blog.

I think all of these are interesting ideas and have much to commend them. It is great to see information of a similar type, from three different agencies, being shared through a single vehicle – this is definitely a step forward from a user’s perspective. It’s also nice to see the government experiment with different vehicles for delivery (mobile and other parties’ websites).

But from a citizen-centric perspective, all these innovations share a common problem: they don’t fundamentally change the citizen’s experience with this information. In other words, they are simply efforts to find new ways to “broadcast” the information. As a result, I predict that these initiatives will have a minimal impact as currently structured. There are two reasons why:

The problem isn’t about access: These tools are predicated on the idea that the obstacle to conveying this information is access to it. It isn’t. The truth is, people don’t care. We can debate whether they should care, but the fact of the matter is, they don’t. Most people won’t pay attention to a product recall until someone dies. In this regard these tools are simply the modern-day version of newspaper ads, which, historically, very few people actually paid attention to. We just couldn’t measure it, so we pretended that people read them.

The content misses the mark: Scratch a little deeper on these tools and you’ll notice something. They are all, in essence, press releases. All of these tools, the RSS feed, blog widget and mobile apps, are simply designed to deliver a marginally repackaged press release. Given that people tuned out of newspaper ads, pushing the same content at them through another device will likely have a limited impact.

As a result, I suspect that those likely to pay attention to these innovations are those who were already paying attention. This is okay and even laudable. There is a small segment of people for whom these applications reduce the transaction costs of access. However, with regard to expanding the number of Canadians reached by this information, or changing behaviour in a broader sense, these tools have limited impact. To be blunt, no one is checking a mobile application before they buy a product, nor are they reading these types of widgets on a blog, nor is anyone subscribing to an RSS feed of recalls and safety warnings. Those who are, are either being paid to do so (it is a requirement of their job) or are fairly obsessive.

In short, this is a government-centric solution – it seeks to share information the government has, in a context that makes sense to government – it is not citizen-centric, sharing the information in a form that matters to citizens or relevant parties, in a context that makes sense to them.

Again, I want to state that while I draw this conclusion, I still applaud the people at Health Canada. At least they are trying to do something innovative and creative with their data and information.

So what would a citizen-centric approach look like? Interestingly, it would not involve trying to reach out to citizens directly.

People are wrestling with a tsunami of information. We can’t simply bombard them with more of it, nor can we expect them to consult a resource every time they are going to make a purchase.

What would make this data far more useful would be to structure it so that others could incorporate it into software and applications that could shape people’s behaviors and/or deliver the information in the right context.

Take this warning, for example: “CERTAIN FOOD HOUSE BRAND TAHINI OF SESAME MAY CONTAIN SALMONELLA BACTERIA” posted on Monday by the Canadian Food Inspection Agency. There is a ton of useful information in this press release including things like:

The geography impacted: Quebec

The product name, size and better still the UPC and LOT codes.

| Product | Size | UPC | Lot codes |
|---|---|---|---|
| Tahini of Sesame | 400gr | 6 210431 486128 | Pro: 02/11/2010 and Exp: 01/11/2012 |
| Tahini of Sesame | 1000gr | 6 210431 486302 | Pro: 02/11/2010 and Exp: 01/11/2012 |
| Premium Halawa | 400gr | 6 210431 466120 | Pro: 02/11/2010 and Exp: 01/11/2012 |
| Premium Halawa | 1000gr | 6 210431 466304 | Pro: 02/11/2010 and Exp: 01/11/2012 |

However, all this information is buried in the text of the press release, so it is hard to parse and reuse.
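To make "structured" a little more concrete, here is a rough sketch of how this one recall might look as a machine-readable record. The field names (`agency`, `hazard`, `region`, `products` and so on) are my own invention for illustration, not anything Health Canada or the CFIA publishes; the values are taken from the warning above.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class RecalledProduct:
    name: str
    size: str
    upc: str        # digits only, spaces from the printed bar code removed
    lot_codes: str  # kept as free text, exactly as in the warning


@dataclass
class Recall:
    # Hypothetical schema: these field names are assumptions for illustration.
    agency: str
    hazard: str
    region: str
    products: List[RecalledProduct]


LOT = "Pro: 02/11/2010 and Exp: 01/11/2012"

recall = Recall(
    agency="Canadian Food Inspection Agency",
    hazard="Salmonella",
    region="Quebec",
    products=[
        RecalledProduct("Tahini of Sesame", "400gr", "6210431486128", LOT),
        RecalledProduct("Tahini of Sesame", "1000gr", "6210431486302", LOT),
        RecalledProduct("Premium Halawa", "400gr", "6210431466120", LOT),
        RecalledProduct("Premium Halawa", "1000gr", "6210431466304", LOT),
    ],
)

# Serialize to JSON, the kind of payload a feed or API could return.
print(json.dumps(asdict(recall), indent=2))
```

Nothing here is new information. It is the same press release, just in a shape that software can consume without scraping prose.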

If the data were structured and easily machine-readable (maybe available as an API, but even as a structured spreadsheet), here’s what I could imagine happening:

- Retailers could connect the bar code scanners they use on their shop floors to this data stream. If any cashier swipes this product at a checkout counter, they would be immediately notified and could prevent the product from being purchased. This we could do today and would be, in my mind, of high value – reducing the time and cost it takes to notify retailers as well as potentially saving lives.

- Mobile applications like RedLaser, which people use to scan bar codes and compare product prices, could use this data to notify the user that the product they are looking at has been recalled. Apps like RedLaser still have a small user base, but it is growing. Probably not a game changer, but at least context sensitive.

- I could install a widget in my browser that, every time I’m on a website that displays that UPC and/or lot code, would notify me that the product has been recalled and that I should not buy it. Here the potential is significant, especially as people buy more and more goods over the web.

- As we move towards having “smart” refrigerators that scan the RFID chips on products to determine what is in the fridge, they could simply notify me via a text message that I need to throw out my jar of Tahini of Sesame. This is a next generation use, but the government would be pushing private sector innovation in the space by providing the necessary and useful data. Every retailer is going to want to sell a “smart” fridge that doubles as a “safe” fridge, telling you when you’ve got a recalled item in it.
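The first scenario, the checkout scanner, is simple enough to sketch in a few lines. This is only an illustration under my own assumptions: the `RECALLED_UPCS` table stands in for whatever a real recall feed would provide, and the function names are hypothetical. The UPCs are the ones from the tahini warning.

```python
from typing import Optional

# Stand-in for a recall data feed, keyed by UPC (digits only).
# In practice this would be refreshed from the structured data source.
RECALLED_UPCS = {
    "6210431486128": "Tahini of Sesame, 400gr",
    "6210431486302": "Tahini of Sesame, 1000gr",
    "6210431466120": "Premium Halawa, 400gr",
    "6210431466304": "Premium Halawa, 1000gr",
}


def normalize_upc(raw: str) -> str:
    """Keep only digits so '6 210431 486128' and '6210431486128' match."""
    return "".join(ch for ch in raw if ch.isdigit())


def check_scan(raw_upc: str) -> Optional[str]:
    """Return a warning for the cashier if the scanned item is recalled, else None."""
    product = RECALLED_UPCS.get(normalize_upc(raw_upc))
    if product is None:
        return None
    return f"RECALLED: {product} (may contain Salmonella). Do not sell."


# A recalled item triggers a warning; anything else passes silently.
print(check_scan("6 210431 486128"))
print(check_scan("0 000000 000000"))
```

The point is that the shopper never sees any of this. The lookup happens at the moment of purchase, in a system the retailer already runs.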

These are all far more citizen-centric, since they don’t require citizens to think, act or pay attention. In short, they aren’t broadcast-oriented; they feel customized, filtering information and delivering it where citizens need it, when they need it, sometimes without them even needing to know. (This is the same argument I made in my post How Yelp Could Help Save Millions in Healthcare Costs.) The most exciting thing about this is that Health Canada already has all the data to do this. It’s just a question of restructuring it so it is of greater use to the various consumers of the data – from retailers, to app developers, to appliance manufacturers. This should not cost that much. (Health Canada, I know a guy…)

Another advantage of this approach is that it also gets the Government out of the business of trying to find ways to determine the best and most helpful way to share information. This appears to be a problem the UK government is also interested in solving. Richard A. sent me this excellent link in which a UK government agency appeals to the country’s developers to help imagine how it can better share information not unlike that being broadcast by Health Canada.

However, at the end of the day even this British example falls into the same problem – believing that the information is most helpfully shared through an app. The real benefit of this type of information (and open data in general) won’t be when you can create a single application with it, but when you can embed the information into systems and processes so that it can notify the right person at the right time.

That’s the challenge: abandoning a broadcast mentality and making information available for multiple contexts and easily embeddable. It’s a big culture shift, but for any government interested in truly exploring a citizen-centric approach, it’s the key to success.