Below is an extended blog post that summarizes the keynote address I gave at the World Bank/Data.gov International Open Government Data Conference in Washington DC on Wednesday July 11th. This piece is cross-posted over at the WeGov blog on TechPresident, where I also write on transparency, technology and politics.

Yesterday, after spending the day at the International Open Government Data Conference at the World Bank (co-hosted by Data.gov), I left both upbeat and concerned. Upbeat because of the breadth of countries participating and the progress being made.

I was worried, however, because of the type of conversation we are having and how it might limit both the growth of our community and the impact open data could have. Indeed, as we talk about technology and how to do open data, we risk missing the real point of the whole exercise – which is about use and impacts.

To drive this point home I want to share three stories that highlight the challenges I believe we should be talking about.

Challenge 1: Scale Open Data

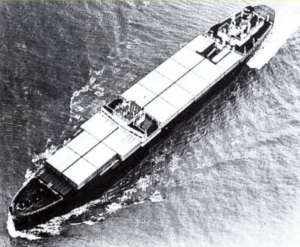

In 1956 Ideal-X, the ship pictured to the left, sailed from Newark to Houston and changed the world.

Confused? Let me explain.

As Marc Levinson chronicles in his excellent book The Box, the world in 1956 was very different from our world today. Global trade was relatively low. China was a long way off from becoming the world’s factory floor. And it was relatively unusual for people to buy goods made elsewhere. Indeed, as Levinson puts it, the cost of shipping goods was “so expensive that it did not pay to ship many things halfway across the country, much less halfway around the world.” I’m a child of the second era of globalization. I grew up in a world of global transport and shipping. The world Levinson is referring to, the one before all of that, is actually foreign to me. What is amazing is how much of that has just become a basic assumption of life.

And this is why Ideal-X, the aforementioned ship, is so important. It was the first cargo container ship (in the sense that we understand containers today). Its trip from Newark to Houston marked the beginning of a revolution because containers slashed the cost of shipping goods. Before Ideal-X the cost of loading cargo onto a medium-sized cargo ship was $5.83 per ton. With containers, the cost dropped to 15.8 cents. Yes, the word you are looking for is: “wow.”
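For anyone who wants to see just how big that drop is, here is a quick back-of-the-envelope calculation. It uses only the two per-ton figures quoted above; the variable names are mine.

```python
# Per-ton loading costs quoted in the post (US dollars).
cost_before = 5.83   # hand-loaded cargo, before containers
cost_after = 0.158   # containerized cargo

# How many times cheaper loading became, and the percentage drop.
ratio = cost_before / cost_after
reduction_pct = (1 - cost_after / cost_before) * 100

print(f"Containers made loading about {ratio:.0f}x cheaper")
print(f"That is a {reduction_pct:.1f}% drop in cost per ton")
```

In other words, loading became roughly 37 times cheaper, a drop of about 97%.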

You have to understand that, before containers, loading a ship was a lot more like packing a mini-van for a family vacation to the beach than the orderly process of what could be described as stacking very large Lego blocks on a boat. Before containers, literally everything had to be hand packed, stored and tied down in the hull (see picture to the right).

This is a little bit like what our open data world looks like today. The people who are consuming open data are like digital longshoremen. They have to look at each open data set differently, unpack it accordingly and figure out where to put it, how to treat it and what to do with it. Worse, working with data from multiple jurisdictions is often much like moving cargo around the world before 1956: a very slow and painful process (see man on the right).

Of course, the real revolution in container shipping happened in 1966 when the size of containers was standardized. Within a few years containers could move pretty much anywhere in the world, from truck to train to boat and back again. In the following decades global shipping trade grew at 2.5 times the rate of economic output. In other words… it exploded.

Geek side bar: For techies, think of shipping containers as the TCP/IP packet of globalization. TCP/IP standardized the packet of information that flowed over the network so that data could move from anywhere to anywhere. Interestingly, like containers, what was in the packet was actually not relevant and didn’t need to be known by whoever was transporting it. But the fact that it could move anywhere created scale and allowed for exponential growth.

What I’m trying to drive at is that, when it comes to open data, the number of open data sets that get published is no longer the critical metric. Nor is the number of open data portals. We’ve won. There are more and more. The marginal political and/or persuasive benefit of adding another open data portal or data set won’t change the context anymore. I want to be clear – this is not to say that more open data sets and more open data portals are not important or valuable – from a policy and programmatic perspective more is much, much better. What I am saying is that having more isn’t going to shift the conversation about open data any more. This is especially true if data continues to require large amounts of work and time for people to unpack and understand it, over and over again, across every portal.

In other words, what IS going to count is how many standardized open data sets get created. This is what we SHOULD be measuring. The General Transit Feed Specification revolutionized how people engaged with public transit because the standard made it so easy to build applications and do analysis around it. What we need to do is create similar standards for dozens, hundreds, thousands of other data sets so that we can drive new forms of use and engagement. More importantly, we need to figure out how to do this without relying on a standards process that takes 8 to 15 to infinite years to decide on said standard. That model is too slow to serve us, and so reimagining/reinventing that process is where the innovation is going to shift next.
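To make the container analogy concrete for the techies, here is a minimal sketch of what a standard buys you. It assumes two transit agencies publish their stops in the GTFS `stops.txt` layout. The column names (`stop_id`, `stop_name`, `stop_lat`, `stop_lon`) come from GTFS; the two cities, their rows and the `load_stops` helper are made up for illustration.

```python
import csv
import io

# Hypothetical excerpts of stops.txt from two different transit agencies.
# The rows are invented; only the column names follow GTFS.
CITY_A_STOPS = """stop_id,stop_name,stop_lat,stop_lon
A1,Main St Station,49.2800,-123.1200
A2,Harbour Terminal,49.2870,-123.1110
"""

# City B publishes an extra optional column, which the parser simply ignores.
CITY_B_STOPS = """stop_id,stop_name,stop_lat,stop_lon,wheelchair_boarding
77,Central Square,38.9000,-77.0300,1
"""


def load_stops(stops_txt):
    """Parse the text of a GTFS-style stops.txt into a list of simple dicts."""
    reader = csv.DictReader(io.StringIO(stops_txt))
    return [
        {
            "id": row["stop_id"],
            "name": row["stop_name"],
            "lat": float(row["stop_lat"]),
            "lon": float(row["stop_lon"]),
        }
        for row in reader
    ]


# The same function handles both cities: no per-city unpacking code.
all_stops = load_stops(CITY_A_STOPS) + load_stops(CITY_B_STOPS)
for stop in all_stops:
    print(stop["name"], stop["lat"], stop["lon"])
```

The point is not the parsing itself, which is trivial. It is that the consumer writes it once and it works for every publisher that follows the standard, instead of playing digital longshoreman for each new portal.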

So let’s stop counting the number of open data portals and data sets, and start counting the number of common standards – because that number is really low. More critically, if we want to experience the kind of explosive growth in use like that experienced by global trade and shipping after the rise of the standardized container then our biggest challenge is clear: We need to containerize open data.

Challenge 2: Learn from Facebook

One of the things I find most interesting about Facebook is that everyone I’ve talked to about it notes how the core technology that made it possible was not particularly new. It wasn’t that Zuckerberg leveraged some new code or invented a new, better coding language. Rather it was that he accomplished a brilliant social hack.

Part of this was luck: the public had come a long way and was much more willing to do social things online in 2004 than it had been even two years earlier with sites like Friendster. Or, more specifically, young people who’d grown up with internet access were willing to do things and imagine using online tools in ways that those who had not grown up with those tools wouldn’t or couldn’t. Zuckerberg, and his users, had grown up digital and so could take the same tools everyone else had and do something others hadn’t imagined, because their assumptions were just totally different.

My point here is that, while it is still early, I’m hoping we’ll soon have the beginnings of a cohort of public servants who’ve “grown up data.” These are people who, despite their short careers in the public service, have matured in a period where open data has been an assumption, not a novelty. My hope and suspicion is that this generation of public servants is going to think about open data very differently than many of us do. Most importantly, I’m hoping they’ll spur a discussion about how to use open data – not just to share information with the public – but to drive policy objectives. The canonical opportunity for me around this remains restaurant inspection data, but I know there are many, many more.

What I’m trying to say is that the conferences we organize have got to talk less and less about how to get data open and have to start talking more about how we use data to drive public policy objectives. I’m hoping the next International Open Government Data Conference will have an increasing number of presentations on how citizens, non-profits and other outsiders are using open data to drive their agendas, and how public servants are using open data strategically to drive toward an outcome.

I think we have to start fostering that conversation by next year at the latest, and that this conversation, about use, has to become core to everything we talk about within 2 years, or we will risk losing steam. This is why I think the containerization of open data is so important, and why I think the White House’s digital government strategy is so important, since it makes internal use core to the government’s open data strategy.

Challenge 3: The Culture and Innovation Challenge.

In May 2010 I gave this talk on Open Data, Baseball and Government at the Gov 2.0 Summit in Washington DC. It centered around the story outlined in the fantastic book Moneyball by Michael Lewis. The book traces how a baseball team – the Oakland A’s – used a new analysis of players’ stats to ferret out undervalued players. This enabled them to win a large number of games on a relatively small payroll. Consider the numbers to the right.

I mean, if you are the owner of the Texas Rangers, you should be pissed! You are paying 250% in salary for 25% fewer wins than Oakland. If this were a government chart, where “wins” were potholes found and repaired, and “payroll” was costs… everyone in the World Bank would be freaking out right now.

For those curious, the analytical “hack” was recognizing that the most valuable thing a player can do on offense is get on base. This is because it gives them an opportunity to score, but it also means you don’t burn one of your three “outs” that would end the inning and the chance for other players to score. The problem was that, to measure the offensive power of a player, most teams were looking at batting averages (along with a lot of other weird, totally non-quantitative stuff), which ignore the possibility of getting walked, which allows you to get on base without hitting the ball!
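Here is a small sketch of why the choice of metric matters. I’m assuming the conventional stat in question is batting average (hits divided by at-bats) and comparing it with on-base percentage using its standard formula. The two players and their numbers are entirely hypothetical.

```python
def batting_average(hits, at_bats):
    """Hits per at-bat. Walks do not count as at-bats, so they are invisible here."""
    return hits / at_bats


def on_base_percentage(hits, walks, hit_by_pitch, at_bats, sacrifice_flies):
    """Standard OBP: times on base divided by plate appearances that count."""
    reached_base = hits + walks + hit_by_pitch
    opportunities = at_bats + walks + hit_by_pitch + sacrifice_flies
    return reached_base / opportunities


# Two made-up players with the same number of at-bats.
players = {
    "Free swinger": dict(hits=150, walks=20, hit_by_pitch=0, at_bats=500, sacrifice_flies=0),
    "Patient hitter": dict(hits=135, walks=90, hit_by_pitch=0, at_bats=500, sacrifice_flies=0),
}

for name, s in players.items():
    avg = batting_average(s["hits"], s["at_bats"])
    obp = on_base_percentage(**s)
    print(f"{name}: AVG {avg:.3f}, OBP {obp:.3f}")
```

Judged by batting average, the free swinger looks like the better player. Judged by on-base percentage, the patient hitter is clearly ahead, because all those walks finally get counted. Same data, different metric, opposite conclusion.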

What’s interesting, however, is that the original thinking about people using the wrong metrics to assess baseball players first happened decades before the Oakland A’s started to use it. Indeed, it was a nighttime security guard with a strong mathematics background and an obsession with baseball who first began pointing this stuff out.

The point I’m making is that it took 20 years for a manager in baseball to recognize that there was better evidence and data they could be using to make decisions. TWENTY YEARS. And that manager was hated by all the other managers, who believed he was ruining the game. Today, this approach to assessing baseball is commonplace – everyone is doing it – but see how the problem of using baseball’s “open data” to create better outcomes was never just an accessibility issue. Once that was resolved, the bigger challenge centered around culture and power. Those with the power had created a culture in which new ideas – ideas grounded in evidence but that were disruptive – couldn’t find an audience. Of course, there were structural issues as well: many people had jobs that depended on not using the data, on instead relying on their “instincts.” But I think the cultural issue is a significant one.

So we can’t expect that we are going to go from open portal today to better decisions tomorrow. There is a good chance that some of the ideas data leads us to will be so radical and challenging that either the ideas, the people who champion them, or both, could get marginalized. On the upside, I feel like I’ve seen some evidence to the contrary in cities like New York and Chicago, but the risk is still there.

So what are we going to do to ensure that the culture of government is one that embraces challenges to our thinking and assumptions, and that doesn’t require 20 years to pass for us to make progress? This is a critical challenge for us – and it is much, much bigger than open data.

Conclusion: Focus on the Dark Matter

I’m deeply indebted to my friend – the brilliant Gordon Ross – who put me on to this idea the other day over tea.

Do you remember the briefcase in Pulp Fiction? The one that glowed when opened? The one the characters were all excited about, but you never knew what was inside it? It’s called a MacGuffin. I’m not talking about the briefcase per se. Rather, I mean the object in a story that all the characters are obsessed with, but that you – the audience – never find out what it is, and that frankly isn’t that important to you. I remember reading that in Pulp Fiction the briefcase allegedly holds Marsellus Wallace’s soul. But ultimately, it doesn’t matter. What matters is that Vincent Vega, Jules Winnfield and a ton of other characters think it is important, and that drives the action and the plot forward.

Again – let me be clear – Open Data Portals are our MacGuffin device. We seem to care A LOT about them. But trust me, what really matters is everything that can happen around them. What makes open data important is not a data portal. The portal is a necessary prerequisite, but it’s not the end, it’s just the means. We’re here because we believe that the things open data can let us and others do, matter. The Open Data portal was only ever a MacGuffin device – something that focused our attention and helped drive action so that we could do the other things – that dark matter that lies all around the MacGuffin device.

And that is what brings me back to our three challenges. Right now, the debate around open data risks becoming too much like a Pulp Fiction conference in which all the panels talk about the briefcase. Instead we should be talking more and more about all the action – the dark matter – taking place around the briefcase. Because that is what really matters. For me, the three things that matter most are what I’ve mentioned in this talk:

- standards – which will let us scale; I believe strongly that the conversation is going to shift from portals to standards;

- strategic use – starting us down the path of learning how open data can drive policy outcomes; and

- culture and power – recognizing that open data is going to surface a lot of reasons why governments don’t want to engage in data-driven decision making

In other words, I want to be talking about how open data can make the world a better place, not about how we do open data. That conversation still matters, open data portals still matter, but the path forward around them feels straightforward, and if they remain the focus we’ll be obsessing about the wrong thing.

So here’s what I’d like to see in the future from our Open Data conferences. We’ve got to stop talking about how to do open data. This is because all of our efforts here, everything we are trying to accomplish… it has nothing to do with the data. What I think we want to be talking about is how open data can be a tool to make the world a better place. So let’s make sure that is the conversation we are having.