I reley dont wan to say this, but I have to now.

– Axman13

Really, it is.

The back and forth for and against UBB has – for me – so badly missed the real issue that it is beyond frustrating. It’s been nice to see a voice or two, like Michael Geist’s, begin to note the real issue – lack of competition – but by and large, we continue to have the wrong debate and, more importantly, blame the wrong people.

This really struck home for me while I was reading David Beers’s piece in the Globe. The first half is fantastic, demanding greater transparency into the cost of delivering bandwidth and of developing a network; this is indeed needed. Sadly, the second half completely lost me: it makes no clear argument, notably doesn’t call for foreign investment (though he wants the telcos’ oligarchy broken up – so it’s unclear where he thinks that competition is going to come from) and, worse, blames the CRTC for the crisis.

It all prompted me – reluctantly – to outline what I think are the three problems with the debate we’ve been having, from least to most important.

1. It’s not about UBB; it’s about cost.

Are people mad about UBB? Maybe. However, I’m willing to wager that most people who have signed the petition about UBB don’t know what UBB is, or what it means. What they do know is that their Internet Service Provider has been charging a lot for internet access. Too much. And they are tired of it.

A more basic question is: do people hate the telcos? And the answer is yes. I know I dislike them all. Intensely. (You should see my cell phone bill; my American friends laugh at me because it is 4x theirs.) The recent decision has simply allowed that frustration to boil over. It has become yet another example of how a telecommunication oligarchy is allowed to charge whatever it wants for service that is inferior to what is often found elsewhere in the world. Of course Canadians are angry. But I suspect they were angry before UBB.

So, if getting gouged is the issue, the problem with making the debate about UBB is that we risk taking our eye off the real issue – the cost of getting online. Even if the CRTC reverses its decision, we will still be stuck with some of the highest rates for internet access in the world. This is the real issue and should be the focus of the debate.

2. If the real issue is about price, the real solution is competition.

Here Geist’s piece, along with the Globe editorial, is worth reading. Geist states clearly that the root of the problem is a lack of competition. It may be that, in a genuinely competitive market, UBB – especially in a world of WiMax or other high-speed wireless solutions – could become the most effective way to charge for access and encourage investment. Why would we want to prevent such a business model from emerging?

I’m hoping, and am seeing hints, that this part of the debate is beginning to come to the fore, but so long as the focus is on banning UBB and not on increasing competition, we’ll be stuck having the wrong conversation.

3. The real enemy is not the CRTC; it’s the Minister of Industry.

This, of course, is both the most problematic part of this debate and the most ironic. The opponents of UBB have made the wrong people the enemy. While people may not agree with the CRTC’s decision, the real problem is not of its making. The CRTC can only act within the regulatory regime it has been given.

The truth of the matter is, after 40 years of the internet, Canada has no digital economy strategy. Given that it is 2011, this is a stunning fact. Of course, we’ve been promised one, but we’ve heard next to nothing about it since the consultation closed. Indeed – and I hope that I’ll be corrected here – we haven’t even heard when it will land.

The point, to make it clear, is that this is not a crisis of regulatory oversight. This is a crisis of policy mismanagement. So the real people to blame are the politicians – and in particular the Industry Minister, who is in charge of this file. But since those opposed to UBB have made it their goal to scream at the CRTC, the government has been all too happy to play along and scream at it as well. Indeed, the biggest irony of it all is that it has allowed the government to take a populist approach and look responsive to a crisis that it is ultimately responsible for.

P.S. Left-wing bonus reason

So if you are a left-leaning anti-UBB advocate – particularly one who publishes opinion pieces – the most ironic part of this debate is that you are the PMO’s favourite person. Not only have you deflected blame for this crisis away from the government and onto the CRTC, you’ve created the perfect conditions for the government to demand an overhaul (or simply the resignation) of key people on the CRTC board.

The only reson this is ironic is beacuase Konrad W. von Finckenstein (head of the CRTC) may be the main reason why Sun Media’s Category 1 application for its “Fox News North” channel was denied. There is probably nothing the PMO would like more than to brush von Finckenstein aside and be able to reshape the CRTC so as to make this plan a reality.

Wouldn’t it be ironic if a left-leaning coalition enabled the Harper PMO and Sun Media to create their Fox News North?

And who said Canadian politics was boring?

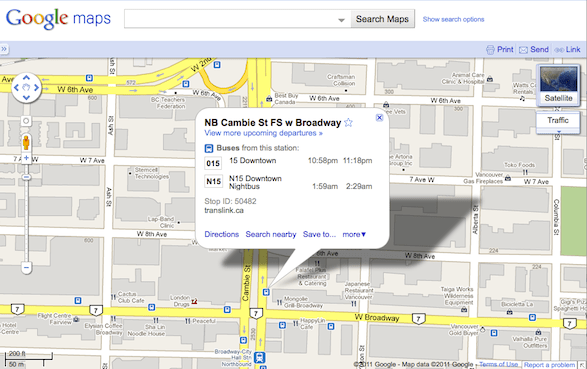

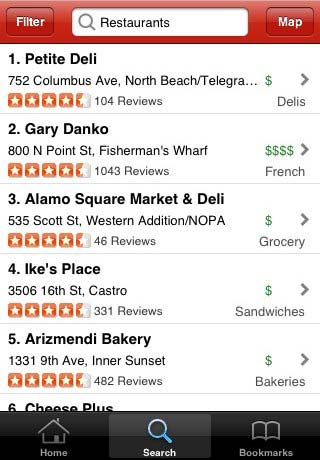

P.P.S. If you haven’t figured out the spelling mistake easter eggs, I’ll make it more obvious: click here. It’s an example of why internet bandwidth consumption is climbing at double-digit rates.